We need to stop calling everything a “Chatbot.”

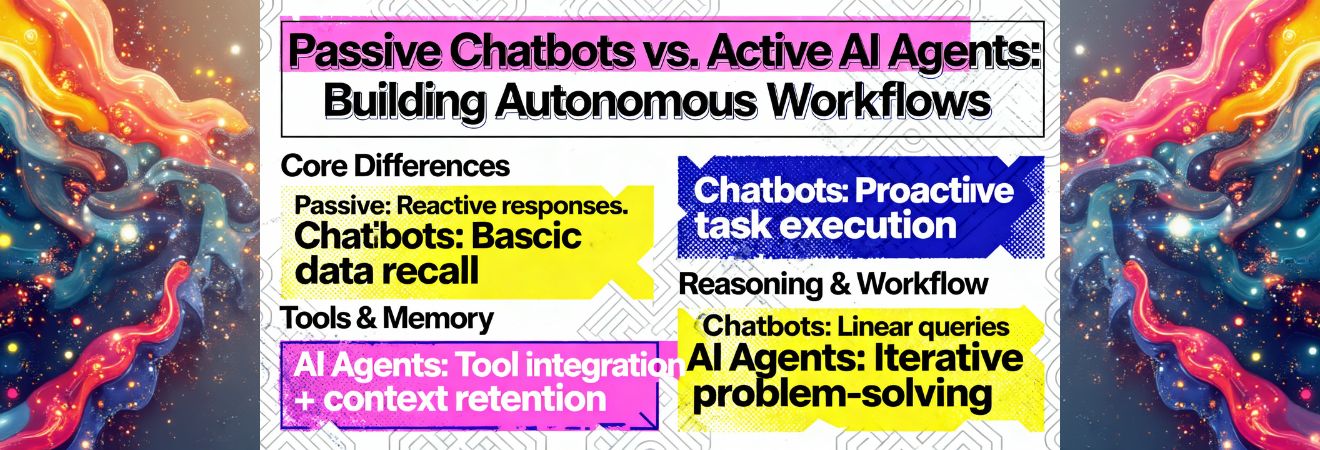

A chatbot is a conversational interface. You say “Hello,” it says “How can I help?” It is passive. It waits for you.

An AI Agent, on the other hand, is active. It has goals. It has tools. It doesn’t wait for you to ask a question; it observes a trigger and sets off to accomplish a mission.

If 2023 was the year of the Chatbot, 2026 is shaping up to be the year of the Agent. Here is why the distinction matters and how you can build goal-oriented workflows today.

Defining “Agency”

What makes an AI an “Agent”? It comes down to a simple loop:

- Thought: The AI analyzes a request.

- Tool Use: The AI decides it needs external information (e.g., search Google, query a database, run a Python script).

- Observation: It looks at the result of that tool’s action.

- Iteration: It decides if the job is done or if it needs to try another tool.

A chatbot talks. An agent does.
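To make the loop concrete, here is a minimal sketch in Python. It is an illustration, not a framework: `Step`, `run_agent`, `toy_decide`, and the `search` tool are all hypothetical names, and `toy_decide` is a hard-coded stand-in for what would normally be a call to a language model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Step:
    thought: str                 # the model's reasoning for this turn
    tool: Optional[str] = None   # None means "the job is done"
    tool_input: str = ""


def run_agent(goal, decide, tools, max_steps=5):
    """Run Thought -> Tool Use -> Observation -> Iteration until done."""
    observations = []
    for _ in range(max_steps):
        step = decide(goal, observations)            # Thought
        if step.tool is None:                        # Iteration: job is done
            return step.thought, observations
        result = tools[step.tool](step.tool_input)   # Tool Use
        observations.append(result)                  # Observation
    # Safety net so a confused agent cannot loop forever.
    return "stopped: step limit reached", observations


def toy_decide(goal, observations):
    """Stand-in for an LLM call: search once, then finish."""
    if not observations:
        return Step("I need data first.", "search", goal)
    return Step(f"Done. Found: {observations[-1]}")


# Hypothetical tool that returns a canned string instead of hitting the web.
TOOLS = {"search": lambda query: f"results for '{query}'"}

answer, trace = run_agent("AI news", toy_decide, TOOLS)
print(answer)
```

The `max_steps` cap is a design choice worth keeping in any real version: the agent decides when it is finished, so the loop needs an external limit.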

Example: The “Research Agent”

Let’s look at a practical use case I recently built. I didn’t want to manually check tech news every morning. So, I built a Research Agent.

- The Goal: “Find the top 3 AI news stories from the last 24 hours, summarize them, and draft a LinkedIn post.”

- The Workflow:

  - The Agent uses a Search Tool (like Serper or Tavily) to search the web.

  - It visits specific URLs to read the content.

  - It reflects on the content: “Is this relevant to Fatih’s audience?”

  - It drafts the post and sends it to my Slack for approval.

I didn’t script how to find the news. I gave it the goal and the tools. The Agent figured out the rest.
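"Giving it the goal and the tools" might look roughly like the sketch below. Everything here is an assumption made for illustration: the `Tool` class, the three tool functions, and `build_system_prompt` are hypothetical, and the tool bodies return placeholder values rather than calling Serper, Tavily, or Slack.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Tool:
    name: str
    description: str           # what the model reads when choosing a tool
    run: Callable[[str], str]


def search_web(query: str) -> str:
    # Placeholder: a real version would call a search API here.
    return "https://example.com/story-1\nhttps://example.com/story-2"


def read_url(url: str) -> str:
    # Placeholder: a real version would fetch and clean the page text.
    return f"(text of {url})"


def send_to_slack(draft: str) -> str:
    # Placeholder: a real version would post the draft for human approval.
    return "queued for approval"


GOAL = ("Find the top 3 AI news stories from the last 24 hours, "
        "summarize them, and draft a LinkedIn post.")

TOOLS = [
    Tool("search_web", "Search the web for recent pages.", search_web),
    Tool("read_url", "Fetch the text content of a URL.", read_url),
    Tool("send_to_slack", "Send a draft post for approval.", send_to_slack),
]


def build_system_prompt(goal: str, tools: List[Tool]) -> str:
    """Describe the goal and the toolbox; the order of steps is left to the model."""
    lines = [f"Goal: {goal}", "Available tools:"]
    lines += [f"- {t.name}: {t.description}" for t in tools]
    lines.append("Decide which tool to call next, or reply DONE.")
    return "\n".join(lines)


SYSTEM_PROMPT = build_system_prompt(GOAL, TOOLS)
print(SYSTEM_PROMPT)
```

Note what is missing: there is no line that says "search first, then read, then draft." The prompt only states the goal and lists the tools.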

The Role of Memory (Vector Databases)

Agents need context. If you ask an agent to “handle a refund,” it needs to know your refund policy.

This is where Vector Databases (like Pinecone, Qdrant, or Weaviate) act as the Agent’s long-term memory. Instead of stuffing a PDF into a prompt, the Agent can “query” its memory to find the exact paragraph about “Refunds for damaged goods” before making a decision.
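The idea of "querying memory" can be shown with a toy version. This sketch does not use Pinecone, Qdrant, or Weaviate; it is a tiny in-memory stand-in, and its `embed` function just counts words, where a real system would use a learned embedding model. The `Memory` class and the policy snippets are invented for the example.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: lowercase word counts (an assumption, not a real model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


class Memory:
    """Minimal vector store: keeps (text, vector) pairs and ranks by similarity."""

    def __init__(self):
        self.items = []

    def add(self, text):
        self.items.append((text, embed(text)))

    def query(self, question, k=1):
        q = embed(question)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = Memory()
# Invented policy snippets, standing in for paragraphs of a real policy document.
memory.add("Refunds for damaged goods are issued within 14 days of a photo report.")
memory.add("Standard shipping takes 3 to 5 business days.")
memory.add("Gift cards cannot be exchanged for cash.")

top = memory.query("What is the policy on refunds for damaged goods?")
print(top[0])
```

Only the best-matching paragraph goes into the prompt, which is the point: the agent retrieves what it needs instead of carrying the whole document around.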

Real-World Autonomy

Imagine a Customer Support Agent that doesn’t just answer questions but actually resolves issues.

- User: “My package is lost.”

- Chatbot: “I’m sorry to hear that. Please email support.”

- Agent: Checks the shipping API -> Sees the delay -> Issues a $10 credit to the user’s account -> Reschedules delivery -> Emails the user with the new tracking number.

The shift from “talking” to “acting” is where the real ROI of AI lies.
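Here is a rough sketch of the actions in that lost-package example. Treat it as a picture of the tool surface, not a real integration: `FakeBackend` and all of its methods are hypothetical, nothing talks to an actual shipping, billing, or email service, and the sequence is hard-coded where a real agent would choose each call itself.

```python
from dataclasses import dataclass, field


@dataclass
class FakeBackend:
    """In-memory stand-in for shipping, billing, and email services."""
    log: list = field(default_factory=list)

    def check_shipment(self, order_id):
        self.log.append(f"checked {order_id}")
        return {"status": "delayed"}     # canned response for the example

    def issue_credit(self, user, amount):
        self.log.append(f"credited {user} ${amount}")

    def reschedule(self, order_id):
        self.log.append(f"rescheduled {order_id}")
        return "TRACK-NEW-001"           # made-up tracking number

    def email(self, user, body):
        self.log.append(f"emailed {user}")


def resolve_lost_package(backend, user, order_id):
    """Check -> credit -> reschedule -> notify, or hand off to a human."""
    shipment = backend.check_shipment(order_id)
    if shipment["status"] != "delayed":
        # Anything unexpected goes to a person instead of being auto-resolved.
        return "escalate to a human"
    backend.issue_credit(user, 10)
    tracking = backend.reschedule(order_id)
    backend.email(user, f"New tracking number: {tracking}")
    return tracking


backend = FakeBackend()
tracking = resolve_lost_package(backend, "user-42", "order-7")
print(backend.log)
```

The log doubles as an audit trail. Once an agent can issue credits, you want a record of every action it took.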

Conclusion

Building agents requires a mindset shift. You are no longer writing scripts; you are managing digital employees. You give them a job description (System Prompt), a toolbox (APIs), and a performance review (an evaluation of their results).

Are you ready to hire your first digital worker?